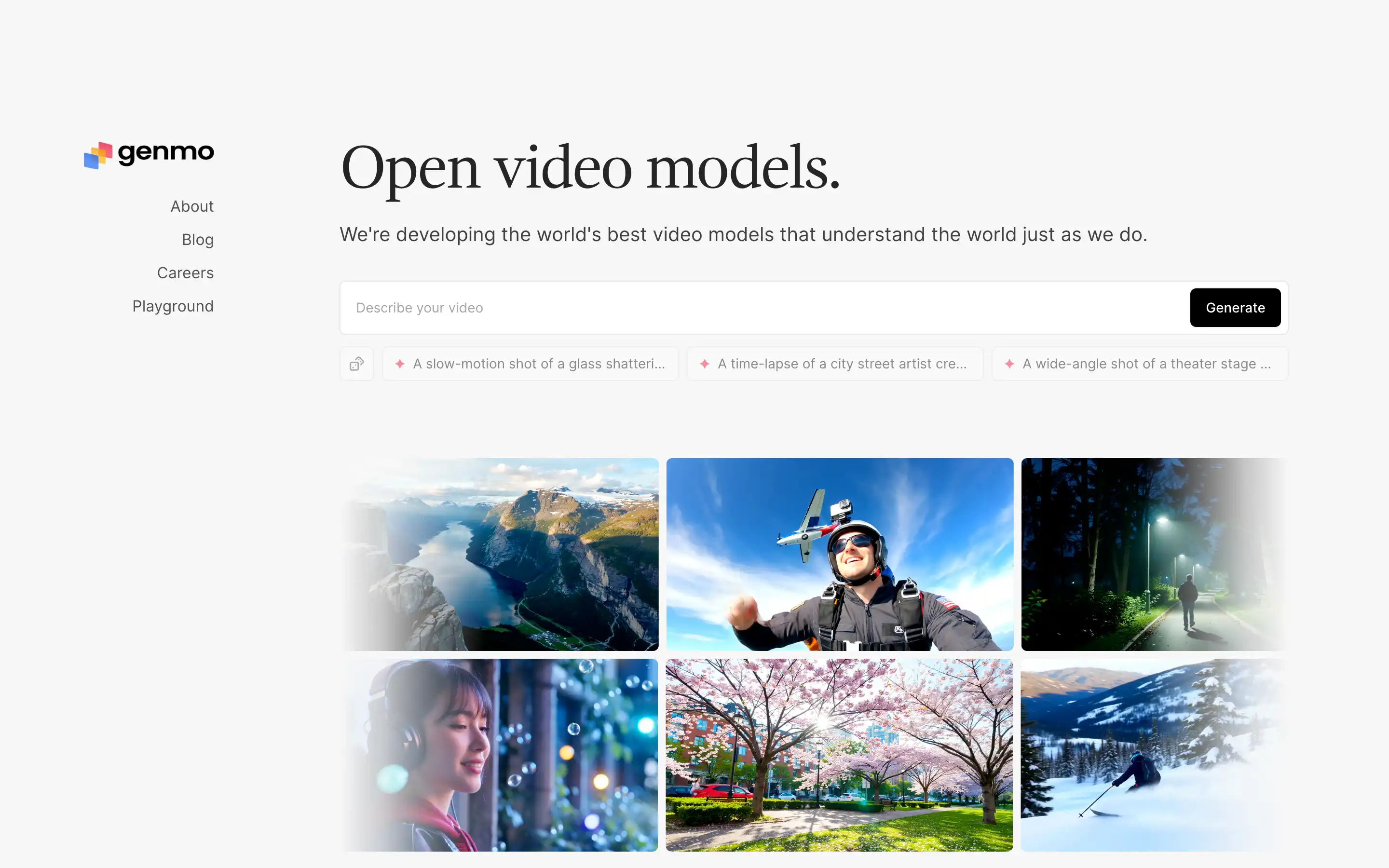

Genmo is a research lab building open, state-of-the-art video generation models. Its flagship release is Mochi 1, an open-source text-to-video model. The homepage presents Genmo’s mission as “unlocking the right brain of AGI” by turning written prompts into short, coherent video clips. It also offers example prompts and links to download Mochi 1 from GitHub and run it locally. Mochi 1 generates 480p video at 30 frames per second from text descriptions. It is released under a permissive open-source license, so developers can inspect, customize, and integrate it into their own workflows.

Beyond the model weights and quickstart scripts, Genmo provides a hosted playground where non-technical users can generate videos directly in the browser. More advanced users can run the model on their own infrastructure for research or production experiments. The site positions Mochi 1 as a free alternative to proprietary video models. It emphasizes the model's prompt adherence, its realistic motion, and its suitability for creative projects, prototyping, and synthetic data generation. Overall, Genmo serves creators, developers, and researchers who want high-quality text-to-video generation with the transparency and flexibility of open-source tools.