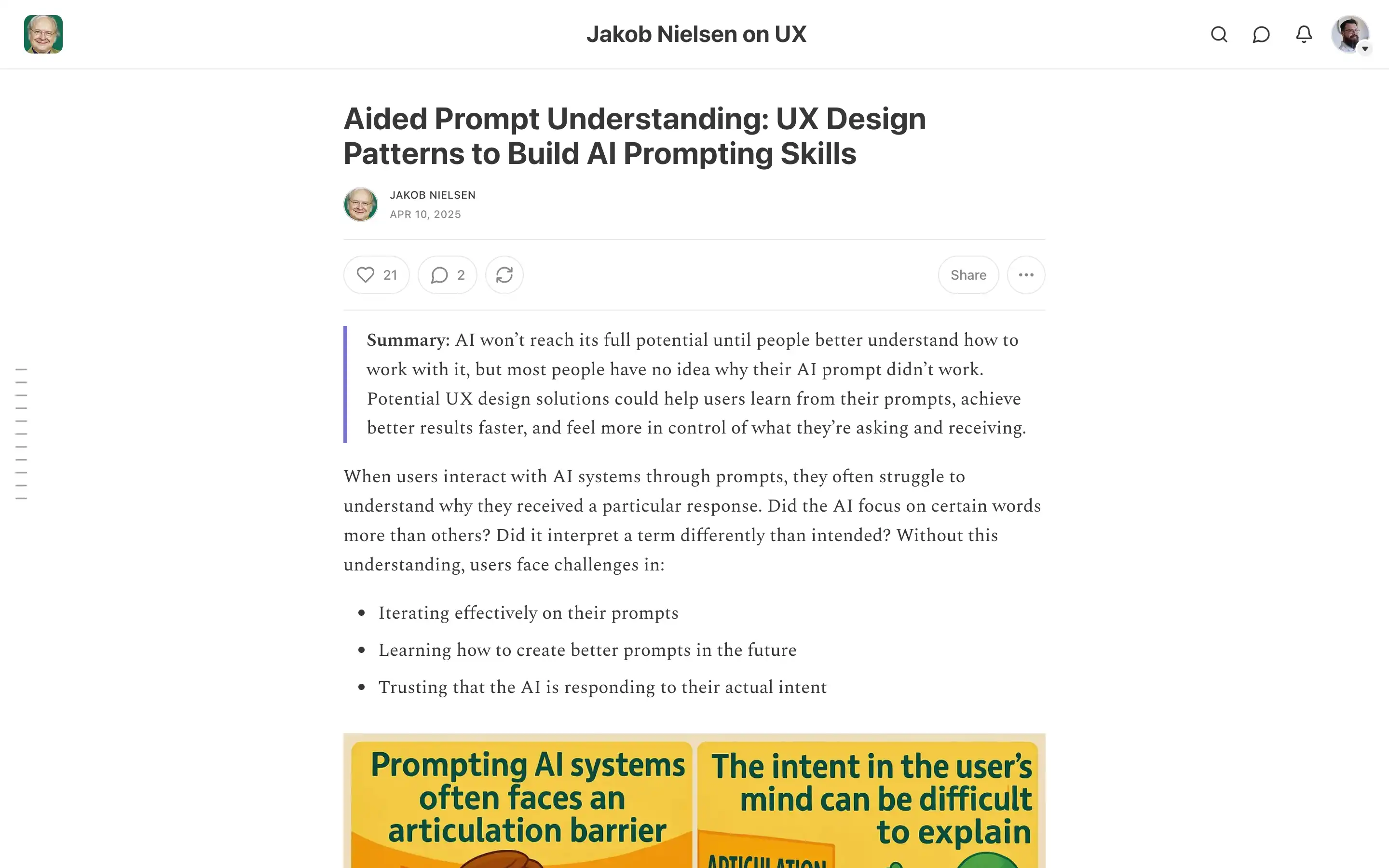

“Aided Prompt Understanding: UX Design Patterns to Build AI Prompting Skills” is an article by Jakob Nielsen arguing that prompt engineering is not enough: people also need help understanding what their AI prompts actually did and why the system responded the way it did. He frames this as an “articulation barrier”: users struggle to see which words the AI focused on, how it interpreted ambiguous terms, and why a result missed their intent, which makes it hard to iterate, learn, or trust the system. To address this, Nielsen proposes “aided prompt understanding” features, UX patterns that make the AI’s interpretation more transparent and turn each interaction into a chance to build long-term prompting skill rather than just get a one-off answer.

The rest of the article surveys concrete design patterns that could provide this support, many of them drawn from existing tools and research prototypes:

- chain-of-thought summaries that expose the AI’s reasoning;
- reverse prompting (e.g., Midjourney’s Describe), which turns outputs back into prompts;
- prompt feedback features (e.g., Hinge’s graded dating prompts);
- visualizations that highlight which prompt words influenced which parts of an image or text;
- prompt-variation tools and side-by-side comparisons;
- confidence indicators;
- real-time prompt “spell-check” that flags weak or unknown terms; and
- personalized, history-aware prompt assistance.

Nielsen concludes that these ideas are promising but still rare in mainstream products, and he calls for future AI interfaces that blend conversation with guided interaction so users can better understand, refine, and own their prompts over time.